Workflow Engine

At the heart of the Zenoo Hub is a workflow engine that executes Hub DSL scripts.

Workflow Execution Engine


Hub DSL scripts orchestrate digital-onboarding processes as a series of pages (routes), external calls and other steps.

The DSL scripts are versioned and stored in the component repository as Hub components.

This makes it possible to change onboarding processes on the fly, without rebuilding and redeploying the Zenoo Hub.

There are two types of executable DSL scripts:

- workflow, a series of user interactions, data transformations and external calls,

- function, a series of external calls and data transformations.

Execution context

Each workflow execution is assigned an Execution Context that stores the current state of the execution.

An execution context stores the following:

- UUID, the unique execution ID,

- parent UUID, the UUID of the parent execution, set when executing sub-workflows or functions,

- sharable token, the token used to start the execution,

- Execution events, generated throughout the execution, see Execution events,

- Context attributes, the execution's JSON-like data, see Context attributes.

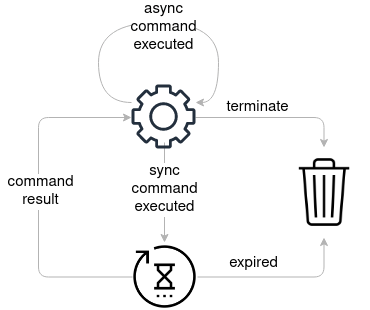
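The fields listed above can be modelled as a small data structure. The following is a minimal Python sketch, not the Hub's actual implementation: the class name `ExecutionContext`, its field names and the `child()` helper are hypothetical, and events and attributes are kept as plain lists and dictionaries.

```python
from dataclasses import dataclass, field
from typing import Any, Optional
from uuid import uuid4


@dataclass
class ExecutionContext:
    """Hypothetical model of the state kept for one execution."""

    sharable_token: str                 # token used to start the execution
    parent_uuid: Optional[str] = None   # set only for sub-workflows/functions
    uuid: str = field(default_factory=lambda: str(uuid4()))     # unique execution ID
    events: list[dict[str, Any]] = field(default_factory=list)  # execution events
    attributes: dict[str, Any] = field(default_factory=dict)    # JSON-like data

    def child(self, sharable_token: str) -> "ExecutionContext":
        """Create the context of a sub-workflow or function started by this execution."""
        return ExecutionContext(sharable_token=sharable_token, parent_uuid=self.uuid)


root = ExecutionContext(sharable_token="token-123")
sub = root.child(sharable_token="token-123")
print(sub.parent_uuid == root.uuid)  # True
```

Linking a child to its parent only through `parent_uuid` keeps each context independent, so a sub-workflow's state can be stored and discarded separately from the execution that started it.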

Execution life-cycle

A new execution is triggered by an Execute request.

Typically, an Execute request is generated by a Hub Client via the Hub Client API.

In addition, executions may produce Execute requests to trigger sub-workflow, function or route function executions.

An execution is terminated when one of the following criteria is met:

- a terminal route is executed,

- a result() or error() command is executed,

- the end of the DSL script is reached.

An execution expires when its duration exceeds the configured expiration time, see here.

When an execution terminates or expires, the corresponding execution context is discarded.
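The termination and expiry rules above can be sketched as follows. This is an illustration under simplifying assumptions: the DSL script is reduced to a flat list of command names, and `Execution`, `advance()`, `expiration_seconds` and the command strings are hypothetical; sub-executions and asynchronous commands are ignored.

```python
from dataclasses import dataclass, field

# Commands that end an execution immediately.
TERMINATING_COMMANDS = {"result()", "error()"}


@dataclass
class Execution:
    """Hypothetical execution: the DSL script is simplified to a list of command names."""

    script: list[str]
    started_at: float            # start time, in seconds
    expiration_seconds: float    # hypothetical name for the configured expiration
    terminal_routes: set[str] = field(default_factory=set)
    position: int = 0

    def is_expired(self, now: float) -> bool:
        return now - self.started_at > self.expiration_seconds

    def run_next(self) -> bool:
        """Run the next command and report whether the execution has terminated."""
        command = self.script[self.position]
        self.position += 1
        if command in TERMINATING_COMMANDS or command in self.terminal_routes:
            return True
        return self.position == len(self.script)  # the whole script was executed


def advance(store: dict[str, Execution], execution_id: str, now: float) -> str:
    """Advance one execution; discard its context once it terminates or expires."""
    execution = store[execution_id]
    if execution.is_expired(now):
        del store[execution_id]
        return "expired"
    if execution.run_next():
        del store[execution_id]
        return "terminated"
    return "running"
```

For example, an execution of `["route:welcome", "route:done"]` with `terminal_routes={"route:done"}` reports `"running"` after the first call to `advance()` and `"terminated"` after the second, at which point its entry has been removed from `store`.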

Execution model

An execution can be thought of as a sequence of DSL commands defined by the script being executed.

When a command finishes, its result is stored as a context attribute under the command's namespace setting.

Once a command result is set, it can be used by subsequent commands and for making flow control decisions.
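A minimal sketch of this model, assuming each command is a Python function that receives the current context attributes and returns its result. The function `run_commands()` and the namespaces `applicant`, `adult` and `next_route` are hypothetical.

```python
from typing import Any, Callable

# A command receives the current context attributes and returns its result.
Command = Callable[[dict[str, Any]], Any]


def run_commands(commands: list[tuple[str, Command]]) -> dict[str, Any]:
    """Run (namespace, command) pairs in order, storing each result under its namespace."""
    attributes: dict[str, Any] = {}
    for namespace, command in commands:
        # Every command can read the results of the commands before it.
        attributes[namespace] = command(attributes)
    return attributes


attributes = run_commands([
    ("applicant", lambda ctx: {"name": "Jane", "age": 17}),
    ("adult", lambda ctx: ctx["applicant"]["age"] >= 18),
    # Flow control: pick the next route based on an earlier result.
    ("next_route", lambda ctx: "kyc" if ctx["adult"] else "rejected"),
])
print(attributes["next_route"])  # rejected
```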

Notes to expand:

- sync and async commands

- sub-workflow, a/sync functions, route functions

- going back, route checkpoint

- route submit validation

- exchange/route payload reduction

- exchange error handling, fallback, validation

Execution processors

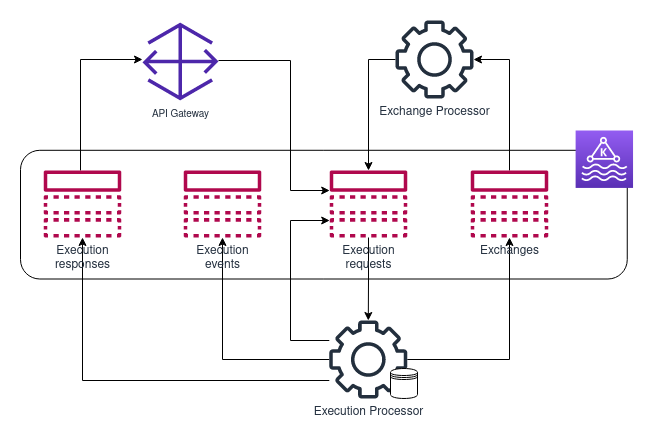

At its core, the execution engine uses a stateful Kafka Streams processor to consume incoming execution requests and produce the corresponding responses.

The processor persists and retrieves execution contexts in a state store. For fault tolerance, the store is backed by a replicated Kafka changelog topic that records every state update.
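Kafka Streams restores a state store after a failure by replaying its changelog topic. The sketch below imitates that idea in plain Python, with a list standing in for the changelog topic. `ChangelogBackedStore` is a hypothetical class, not the Kafka Streams API; in Java, this role is played by a persistent `KeyValueStore` with change logging enabled, which is the default.

```python
from typing import Any, Optional

ChangelogEntry = tuple[str, Optional[Any]]


class ChangelogBackedStore:
    """Hypothetical in-memory stand-in for a changelog-backed state store.

    Every write is also appended to the changelog, so the store can be rebuilt
    from the changelog alone. None is reserved as the deletion marker (tombstone).
    """

    def __init__(self, changelog: list[ChangelogEntry]):
        self.changelog = changelog
        self._data: dict[str, Any] = {}

    def put(self, key: str, value: Any) -> None:
        self._data[key] = value
        self.changelog.append((key, value))

    def delete(self, key: str) -> None:
        self._data.pop(key, None)
        self.changelog.append((key, None))  # tombstone, as in a compacted topic

    def get(self, key: str) -> Optional[Any]:
        return self._data.get(key)

    @classmethod
    def restore(cls, changelog: list[ChangelogEntry]) -> "ChangelogBackedStore":
        """Rebuild the state by replaying the changelog from the beginning."""
        store = cls(changelog)
        for key, value in changelog:
            if value is None:
                store._data.pop(key, None)
            else:
                store._data[key] = value
        return store


changelog: list[ChangelogEntry] = []
store = ChangelogBackedStore(changelog)
store.put("exec-1", {"route": "welcome"})
store.put("exec-1", {"route": "kyc"})
store.put("exec-2", {"route": "welcome"})
store.delete("exec-2")  # e.g. the execution terminated

# Simulate a restart: rebuild the store from the changelog alone.
restored = ChangelogBackedStore.restore(changelog)
print(restored.get("exec-1"))  # {'route': 'kyc'}
print(restored.get("exec-2"))  # None
```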

The Execution processor consumes Execution requests from the execution-requests Kafka topic.

Execution requests are produced by:

- the API Gateway, via the Hub Client API,

- the Exchange Processor, to submit connector results,

- the Execution Processor, to trigger a child execution.

Each execution produces a detailed log of Execution events, stored in the execution-events Kafka topic.

These include life-cycle events, execution requests, responses, errors, executed commands, results, etc.

The Execution processor produces Execution responses, stored in the execution-responses Kafka topic. These include routes, function results and errors.

The API Gateway uses the execution responses for corresponding request queries, see Request API.

In addition, the Execution processor produces Exchange requests, stored in the exchanges Kafka topic, which are handled by the Exchange Processor.
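The topic flow described in this section can be sketched as a single request handler. This is a simplification under stated assumptions: Kafka topics are modelled as in-memory lists keyed by topic name, and the record shapes (`uuid`, `next`, `kind`, `connector`) and the `process()` function are hypothetical, not the Hub's actual message formats.

```python
from collections import defaultdict
from typing import Any

Record = dict[str, Any]


def process(request: Record, topics: dict[str, list[Record]]) -> None:
    """Hypothetical handler for one record from the execution-requests topic."""
    execution_id = request["uuid"]
    topics["execution-events"].append({"uuid": execution_id, "type": "request-received"})
    step = request["next"]  # hypothetical: the next step the script performs
    if step["kind"] == "external-call":
        # External calls are handed to the Exchange Processor.
        topics["exchanges"].append({"uuid": execution_id, "connector": step["connector"]})
    else:
        # Routes, function results and errors are returned to the API Gateway.
        topics["execution-responses"].append({"uuid": execution_id, "payload": step})
    topics["execution-events"].append({"uuid": execution_id, "type": "step-executed"})


topics: dict[str, list[Record]] = defaultdict(list)
topics["execution-requests"] += [
    {"uuid": "e1", "next": {"kind": "route", "name": "welcome"}},
    {"uuid": "e1", "next": {"kind": "external-call", "connector": "kyc"}},
]
for request in topics["execution-requests"]:
    process(request, topics)
print(len(topics["execution-events"]))  # 4
```

In the real flow, the Exchange Processor would later submit the connector result as a new record on execution-requests, which closes the loop back to the Execution processor.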
